The Real AI Race Isn't Benchmarks—It's Who Can Afford to Industrialize Intelligence at Scale

Everyone's talking about AI transforming business. Few are asking the obvious question: at what price? For AI to become true economic infrastructure, like power or the internet, companies need to know what it will cost. Not just today, but sustainably, over years. And when you look past the benchmarks and the hype, the factors that will actually determine AI pricing are barely part of the conversation. They should be, because betting your company on today's API costs might be one of the riskiest strategic decisions you can make.

The Real Question about Scaling AI

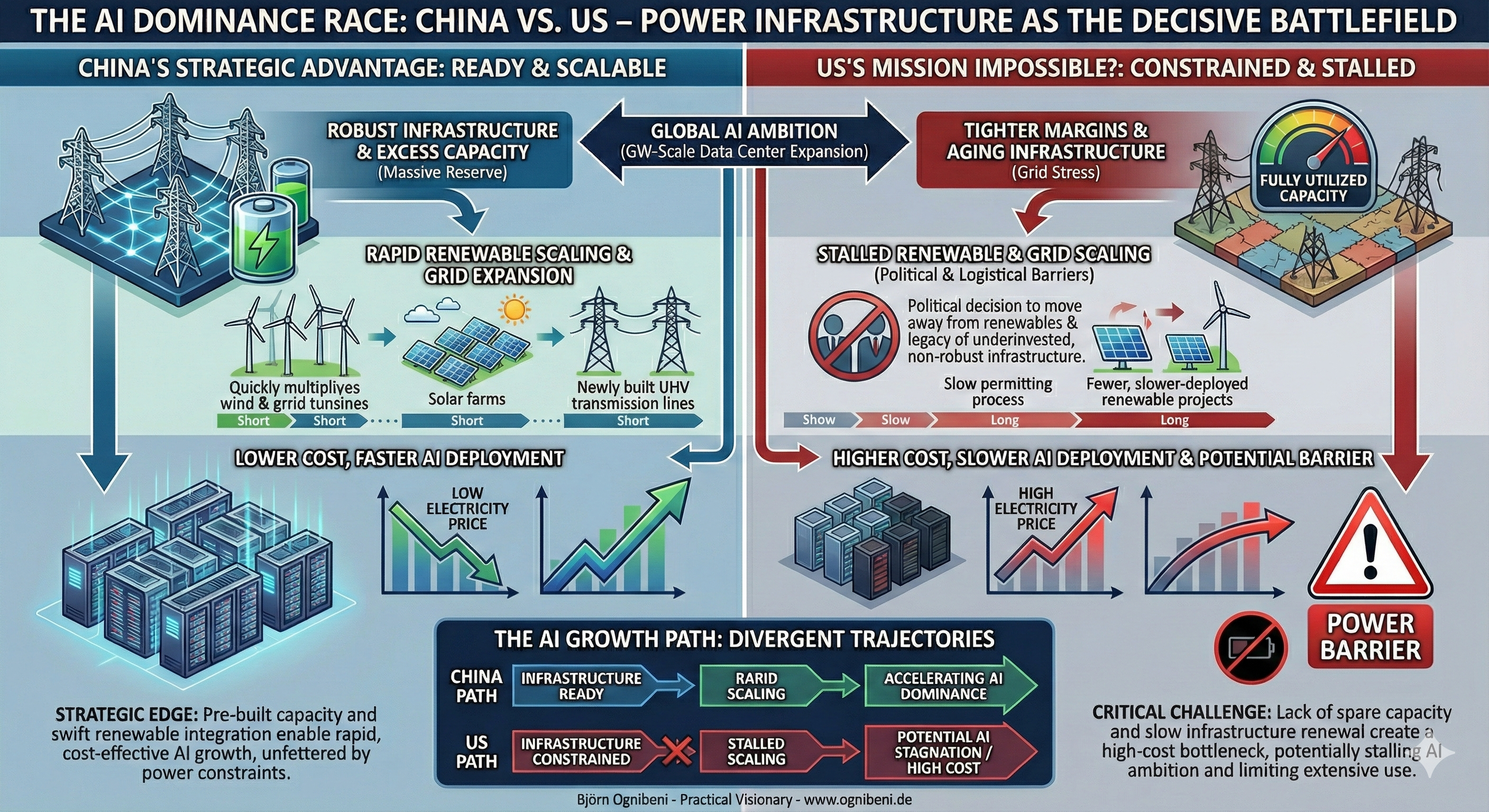

While everyone's focused on who has the best AI models, there's a more fundamental battle that gets largely overlooked: who can actually power the AI revolution?

Both the US and China are racing to build massive data centers, adding up to hundreds of gigawatts of new capacity. But here's where it gets interesting: building a data center takes months. Building the power infrastructure to run it? That takes years, or even decades.

China has spent the last two decades building robust power infrastructure with significant excess capacity. It deploys renewable energy at unprecedented speed. When a new AI data center comes online in China, the power is already there. And it's cheap.

The US? Different story. Aging grid infrastructure. Complex regulatory environments across 50 states. And perhaps most critically: diminishing political will for the large-scale renewable energy projects needed to power AI's exponential growth.

The Energy Tax Nobody Voted For

But here's the uncomfortable question nobody's asking: Is there even enough power in the US? And if AI data centers get priority access to a constrained grid, what happens to everyone else? Ordinary citizens. Traditional manufacturers. Small businesses. Energy prices don't exist in a vacuum—when Big Tech competes for scarce megawatts, the rest of the economy pays the price. The AI boom could become an energy tax on American industry and households.

So this isn't just about who has more money to build data centers faster. It's about who can afford to run them. AI compute costs are tightly tied to electricity prices, so we might be watching the emergence of an AI economic moat that has nothing to do with algorithms or talent, and everything to do with kilowatt-hours.

Silicon Valley has the innovation. But Beijing might have the infrastructure to industrialize it at scale. And this infrastructure gap creates a "Volatility Trap" whose consequences reach far beyond OpenAI, Google or Meta.

Today's Prices Are a Mirage

Here's what most people miss: today's API prices don't reflect what AI actually costs to deliver. They're subsidized by a trillion-dollar bubble that hasn't paid off yet. Wall Street is financing an infrastructure buildout that is running at a loss, betting on future dominance. Western companies building AI dependencies at current rates are budgeting against a mirage. The moment that bet sours, the subsidy vanishes and real economics kick in, which means sharply rising API costs.

China's AI+ policy aims to take industrial production to a whole new level through AI and fully digitized organizations. The idea is to use AI as a foundational platform for business. But this strategy only works if input costs are stable.

In the US, the collision of physical scarcity (limited power) and financial speculation (trillion-dollar valuations) creates a dangerous environment for industrial dependency. When compute is constrained by the grid and priced by Wall Street expectations, API costs become unpredictable. If the stock market falls out of love with the AI narrative, or if the grid hits its limit, API prices will skyrocket to protect margins.

In China, this likely won't be an issue: the power is abundant, and Chinese AI companies like Alibaba, DeepSeek and Moonshot are optimizing for industrial scale and efficiency, not just benchmarks. Their prices may look similar today, but they're probably far more sustainable, because they're built around real infrastructure economics rather than speculative capital waiting to exit. And they're backed by an energy system built by a strategic-thinking government with deep pockets.

Why This Isn't Dark Fiber 2.0

And don't expect to buy the dip. This isn't like dark fiber after the dot-com crash, where investors snapped up infrastructure for pennies and waited for demand to catch up. Fiber in the ground stayed useful for decades; AI hardware ages far faster. Data centers face treadmill economics: they constantly need new chips, not because the old ones stop working, but because newer chips deliver more computation per watt. In a power-constrained world, efficiency isn't optional. It's the price of staying competitive.

The same applies to AI models: today they're optimized for benchmarks, but tomorrow efficiency will be the metric that matters most. You can't buy abandoned data centers cheaply and expect to run them profitably on yesterday's chips. This technology demands continuous investment just to stand still.

The Reckoning

This raises the question: can Western industrial companies really bet their manufacturing future on API prices set by firms that are fighting their own power grid, propped up by a financial bubble, and running on a technology treadmill that never stops?

And what happens to US stock market valuations once Wall Street starts asking whether the physical reality of the grid will force a hard stop on the digital fantasy of infinite growth?